From Intuition to Evidence: The True Impact of AI on Developer Productivity

A comprehensive analysis for tech leaders making strategic AI adoption decisions

As CTOs navigate the rapidly evolving landscape of AI-assisted development, one fundamental question persists: Does AI actually deliver measurable productivity gains in real-world enterprise environments?

A groundbreaking year-long enterprise study involving 300 engineers across multiple teams provides the most comprehensive answer to date. The research paper “Intuition to Evidence: Measuring AI’s True Impact on Developer Productivity” offers unprecedented insights into how AI tools perform beyond controlled benchmarks, in the complex reality of production software development.

TL;DR

The Bottom Line: A year-long enterprise study of 300 engineers finds that AI delivers measurable productivity gains when implemented strategically.

Key numbers:

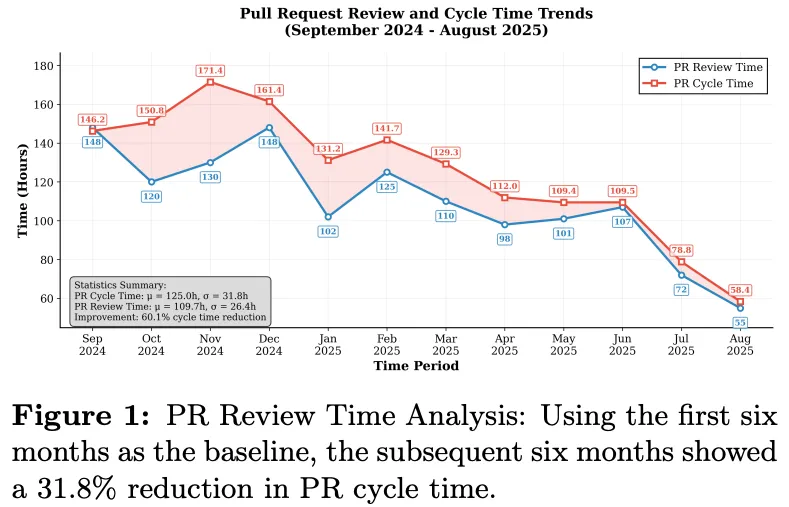

- 31.8% faster PR reviews

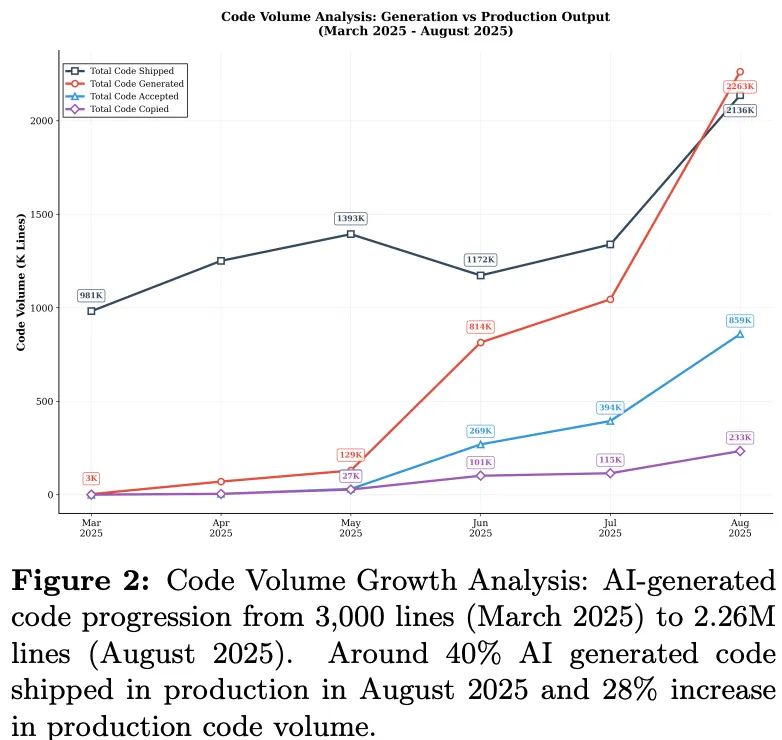

- 61% productivity boost in shipping code for high adopters

- 28% overall code volume increase

- 85% developer satisfaction

- Costs under 2% of engineering budgets.

Critical success factors:

- Proper training

- Workflow integration

- Sustained adoption—not just tool deployment.

Action Required: The AI productivity advantage is real but execution-dependent. In the study, high adopters shipped 61% more code, while low adopters saw an 11% decline in productivity.

*This aligns with LegacyAI’s Software 3.0 vision of development velocity through AI-coordinated teams rather than individual AI assistance.*

The Enterprise Reality Check

While existing research has demonstrated promising results—McKinsey studies show developers completing coding tasks up to twice as fast with generative AI¹, and GitHub’s controlled experiments found 55.8% faster task completion²—this enterprise research stands apart by examining longitudinal, real-world deployment at scale. Unlike controlled experiments with standardized datasets, this study tracked actual engineering teams using AI tools across their entire development lifecycle—from code generation to review cycles.

The timing is critical: 73% of open source contributors now use AI tools³, and McKinsey estimates that direct AI impact on software engineering productivity could range from 20-45% of current annual spending on the function⁴. However, most enterprise studies have focused on short-term gains rather than sustained, organization-wide transformation.

Key Findings That Should Shape Your AI Strategy

The Numbers That Matter: AI Productivity at Scale

| Metric | Impact | Context |
|---|---|---|
| PR Review Time | 31.8% reduction | From 150.5h to 99.6h cycle time |
| Code Volume | 28% overall increase | Sustained across organization |
| Top Adopters | 61% shipped code boost | High-engagement users |
| Developer Satisfaction | 85% for code review | 93% want to continue |
| Cost Per Engineer | $30-34/month | Just 1-2% of engineering costs |
| Peak Adoption | 83% active usage | Month 6 engagement |

1. Statistically Significant Productivity Gains

- 31.8% reduction in PR review cycle time (from 150.5h to 99.6h)

- 28% increase in overall code shipment volume

- 61% code delivery boost for top AI tool adopters

- 40% of production code generated through AI assistance by month 6
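The cycle-time figures above can be sanity-checked with a few lines of arithmetic. The sketch below is illustrative only: the function name `percent_reduction` is an assumption, not something taken from the study, and it uses only the two endpoint values quoted in this article. Computed this way, the endpoints give a reduction of roughly 33.8%, slightly above the 31.8% headline figure, so the headline presumably rests on a different aggregation (for example, averaging per-team reductions). That explanation is itself an assumption.

```python
def percent_reduction(before: float, after: float) -> float:
    """Relative reduction from `before` to `after`, as a percentage."""
    if before <= 0:
        raise ValueError("before must be positive")
    return (before - after) / before * 100.0


# Endpoint values quoted in this article (hours of PR review cycle time).
BEFORE_HOURS = 150.5
AFTER_HOURS = 99.6

hours_saved = BEFORE_HOURS - AFTER_HOURS
reduction = percent_reduction(BEFORE_HOURS, AFTER_HOURS)

print(f"Hours saved per review cycle: {hours_saved:.1f}")
print(f"Reduction computed from endpoints: {reduction:.1f}%")
```

The same function can be applied to your own before-and-after cycle times when evaluating a pilot.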

Try LegacyAI’s Software 3.0 - development velocity through AI-coordinated teams rather than individual AI assistance.

2. Adoption Patterns Reveal Critical Success Factors

The study uncovered a clear “adoption-effect gradient”:

- High adopters: 61% increase in shipped code

- Low adopters: 11% decline in productivity

- Peak engagement: 83% of engineers actively using AI tools by month 6
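To make the size of this gradient concrete, the two cohort figures can be compared directly. This is a minimal sketch using the rounded percentages quoted above; the function names are illustrative, and the ratio calculation assumes both cohorts are measured against the same pre-adoption baseline, which the article does not state explicitly.

```python
# Percentage changes reported in this article (rounded).
HIGH_ADOPTER_CHANGE_PCT = 61   # high adopters: +61% shipped code
LOW_ADOPTER_CHANGE_PCT = -11   # low adopters: -11% productivity


def gap_in_points(high_pct: float, low_pct: float) -> float:
    """Absolute gap between two cohorts, in percentage points."""
    return high_pct - low_pct


def relative_output_ratio(high_pct: float, low_pct: float) -> float:
    """Output of the high cohort relative to the low cohort.

    Assumes both percentages are changes against the same baseline.
    """
    return (100 + high_pct) / (100 + low_pct)


gap = gap_in_points(HIGH_ADOPTER_CHANGE_PCT, LOW_ADOPTER_CHANGE_PCT)
ratio = relative_output_ratio(HIGH_ADOPTER_CHANGE_PCT, LOW_ADOPTER_CHANGE_PCT)

print(f"Gap: {gap} percentage points")
print(f"High adopters ship about {ratio:.2f}x the output of low adopters")
```

With the rounded inputs the gap comes to 72 points; under the same-baseline assumption, high adopters end up shipping roughly 1.8 times what low adopters ship.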

3. ROI Analysis: The Financial Reality

Monthly operational costs averaged $30-34 per engineer—representing just 1-2% of typical engineering costs while delivering double-digit productivity improvements.
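The two figures in this paragraph imply a range for what the study treats as monthly engineering cost per engineer. The sketch below simply inverts the percentage; the function name is hypothetical, and the pairing of the lowest cost with the highest share (and vice versa) is an assumption made to bound the range, not something the study specifies.

```python
def implied_monthly_engineering_cost(tool_cost_usd: float, share_percent: float) -> float:
    """Engineering cost per engineer implied by a tool cost and its share of that cost."""
    if share_percent <= 0:
        raise ValueError("share_percent must be positive")
    return tool_cost_usd * 100 / share_percent


# Figures quoted in this article: $30-34 per engineer per month, 1-2% of engineering costs.
low_estimate = implied_monthly_engineering_cost(30, 2)   # lowest cost at the highest share
high_estimate = implied_monthly_engineering_cost(34, 1)  # highest cost at the lowest share

print(f"Implied monthly engineering cost per engineer: "
      f"${low_estimate:,.0f} to ${high_estimate:,.0f}")
```

Teams with a different loaded cost per engineer can divide the tool cost by their own figure to see what share applies to them; the tooling pays for itself once the productivity gain exceeds that share.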

The CTO Imperative: Quality Without Compromise

Perhaps most critically for technical leadership, the study demonstrates that productivity gains don’t come at the expense of code quality:

- 37% acceptance rate for AI-generated code suggestions (compared to GitHub’s reported 30% industry average⁵)

- 85% developer satisfaction with automated code review

- 93% of engineers expressing desire to continue using AI tools

This aligns with broader industry trends: Accenture’s enterprise study found 96% success rates among initial GitHub Copilot users, with 90% of developers reporting greater job fulfillment⁶. However, quality concerns remain significant—Deloitte research shows success rates drop from 90% for simple prompts to 42% for complex ones⁷, emphasizing the need for strategic implementation.

These metrics indicate that developers aren’t just accepting AI assistance—they’re actively choosing to integrate it into their workflows when properly deployed.

Strategic Implications for Technical Leadership

1. The Implementation Gap

The study reveals a crucial insight: success isn’t guaranteed simply by providing AI tools. The 72.7 percentage-point gap between high adopters (a 61% increase) and low adopters (an 11% decline) highlights that implementation strategy matters more than tool selection.

This validates LegacyAI’s approach to AI coordination rather than just AI assistance—transforming developers into AI Coordination Leaders who orchestrate intelligent virtual squads rather than simply receiving code suggestions.

2. Experience Level Optimization

- Junior engineers (SDE1): 77% productivity increase

- Senior engineers (SDE3): 45% productivity increase

This suggests AI tools can be particularly effective for accelerating junior developer onboarding and reducing the traditional mentoring burden on senior staff.

Explore how LegacyAI’s Virtual Squad Architecture provides specialized AI teams (Architecture AI, Dev AI, Quality AI) that can amplify productivity gains across all experience levels.

3. Workflow Integration is Critical

The research emphasizes that successful AI adoption requires:

- Comprehensive training programs beyond basic tool introduction

- Workflow modifications to integrate AI suggestions into existing code review processes

- Ongoing monitoring to ensure sustained effectiveness

Beyond the Hype: Practical Deployment Challenges

The study candidly addresses implementation realities often overlooked in vendor presentations, corroborating findings from other enterprise research:

Technical Challenges:

- Initial latency issues requiring optimization to sub-500ms response times

- Context window limitations for large codebases requiring sophisticated multi-model architectures⁸

- Complex integration with existing development toolchains

Human Factors:

- Trust building requires time and positive experiences—Microsoft research shows 11 weeks for users to fully realize productivity gains⁹

- More extensive training needed than initially anticipated (Deloitte found 38% of developers lack confidence in AI-generated results⁷)

- Teams must modify existing processes to maximize benefits

The Productivity Paradox: Notably, a 2025 independent study found developers taking 19% longer to complete tasks when using AI tools, despite expecting 24% improvements¹⁰. This highlights the critical importance of proper implementation strategies and training programs.

The Developer Modernization Imperative

This research arrives at a pivotal moment for enterprise technology leadership. As development cycles accelerate and talent acquisition becomes increasingly competitive, AI-assisted development isn’t just an opportunity—it’s becoming a competitive necessity.

The market context is compelling: the AI code review tool market is projected to reach US$750 million in 2025 with a 25.2% CAGR through 2033¹¹, while the broader AI code tools market is expected to grow from US$6.7 billion in 2024 to US$25.7 billion by 2030¹². McKinsey’s analysis suggests AI could boost global GDP by over US$1.5 trillion through developer productivity gains alone¹³.

The study’s longitudinal data provides the evidence base CTOs need to move beyond experimental AI adoption toward strategic, organization-wide implementation.

Transform Your Development Organization: From Individual AI Tools to Coordinated Intelligence

The research reveals a fundamental truth: individual AI assistance tools are just the beginning. The organizations achieving the largest productivity gains aren’t just using AI; they’re orchestrating it strategically.

The Evolution from Software 2.0 to 3.0

The study data points to an inevitable transition:

- Software 1.0 (1950s-2000s): Manual coding by individual developers

- Software 2.0 (2020-2024): AI-assisted copilot tools providing suggestions

- Software 3.0 (2024-Future): AI-coordinated intelligent teams working in parallel

LegacyAI represents this next evolutionary leap—transforming your developers from individual contributors into AI Coordination Leaders who manage intelligent virtual squads.

Why Virtual Squads Outperform Individual AI Tools

The research shows that high adopters achieve 61% productivity gains not through better individual AI assistance, but through systematic coordination. LegacyAI’s Virtual Squad Architecture delivers:

- 🎯 3x Development Velocity through parallel AI coordination

- 🎯 30% Reduction in Coordination Overhead via intelligent task orchestration

- 🎯 Exponential Team Expansion without proportional hiring

- 🎯 Enterprise-Grade Security with SOC 2 Type II compliance

The Competitive Imperative

The research data is unambiguous: organizations implementing strategic AI coordination are achieving 61% code shipping advantages over those using basic AI tools. The question isn’t whether your competitors will adopt AI coordination—it’s whether you’ll lead or follow.

Ready to Transform Your Development Organization? - Schedule Your LegacyAI Strategic Consultation →

Discover how to evolve from Software 2.0 AI assistance to Software 3.0 AI coordination, achieving the productivity gains demonstrated in this groundbreaking research.

Transform your development organization with evidence-based AI implementation strategies. Because in today’s competitive landscape, productivity isn’t just about working faster—it’s about working exponentially smarter.

References

1. McKinsey & Company. “Unleashing developer productivity with generative AI.” McKinsey Digital, 2024. Available: https://www.mckinsey.com/capabilities/mckinsey-digital/our-insights/unleashing-developer-productivity-with-generative-ai

2. Peng, S., Kalliamvakou, E., Cihon, P., & Demirer, M. “The Impact of AI on Developer Productivity: Evidence from GitHub Copilot.” arXiv preprint arXiv:2302.06590, 2023.

3. GitHub. “2024 Open Source Survey.” GitHub Blog, 2024.

4. McKinsey & Company. “The economic potential of generative AI: The next productivity frontier.” McKinsey Digital, 2024.

5. GitHub. “Research: Quantifying GitHub Copilot’s impact on developer productivity and happiness.” GitHub Blog, 2023.

6. GitHub & Accenture. “Research: Quantifying GitHub Copilot’s impact in the enterprise with Accenture.” GitHub Blog, 2024.

7. Deloitte. “AI and software development quality.” Deloitte Insights, 2024. Available: https://www.deloitte.com/us/en/insights/industry/technology/how-can-organizations-develop-quality-software-in-age-of-gen-ai.html

8. Qodo. “AI Code Review and the Best AI Code Review Tools in 2025.” Qodo Blog, 2025.

9. Microsoft Research. “Measuring GitHub Copilot’s Impact on Productivity.” Communications of the ACM, 2024.

10. METR. “Measuring the Impact of Early-2025 AI on Experienced Open-Source Developer Productivity.” METR Research, July 2025.

11. Market Report Analytics. “AI Code Review Tool Growth Pathways: Strategic Analysis and Forecasts 2025-2033.” 2025.

12. ZenCoder AI. “AI Code Generation Trends: Shaping the Future of Software Development in 2025 and Beyond.” 2025.

13. McKinsey & Company. “The economic impact of the AI-powered developer lifecycle.” McKinsey Research, 2024.

Primary Source: Kumar, A., et al. “Intuition to Evidence: Measuring AI’s True Impact on Developer Productivity.” arXiv preprint arXiv:2509.19708, 2025.